We have always used a cloud load balancer to expose our services to the outside world. Let’s see how we can improve the way we expose Kubernetes services by using an ingress controller.

Ingress

An Ingress is a more customizable way to expose our traffic than a traditional cloud LoadBalancer. We configure an Ingress object that defines how to route traffic to each service, so the object controls how clients outside the cluster reach services inside it. Ingress is designed for web traffic, mainly HTTP and HTTPS, and lets us customize routes, with options for securing traffic with SSL/TLS and routing to different backend services based on hostnames and URL paths. Ingress resources do nothing by themselves, so once we define them, we set up an ingress controller.
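As a minimal sketch of such a resource, the manifest below routes by hostname and URL path and terminates TLS. The hostname `shop.example.com`, the Services `web-service` and `api-service`, the Secret `shop-example-tls`, and the `nginx` ingress class are illustrative assumptions, not names from a real setup.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress              # hypothetical name
spec:
  ingressClassName: nginx            # assumes a controller registered under this class
  tls:
    - hosts:
        - shop.example.com
      secretName: shop-example-tls   # assumed Secret of type kubernetes.io/tls
  rules:
    - host: shop.example.com         # hostname-based routing
      http:
        paths:
          - path: /api               # path-based routing to the API backend
            pathType: Prefix
            backend:
              service:
                name: api-service
                port:
                  number: 8080
          - path: /                  # everything else goes to the web frontend
            pathType: Prefix
            backend:
              service:
                name: web-service
                port:
                  number: 80
```

Both backends here are ordinary Services inside the cluster; neither needs its own cloud load balancer.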

Ingress Controller

An ingress controller is the actual application that routes traffic based on the Ingress resources (the Ingress definitions). The very front end exposed to the public will usually still be a single cloud LoadBalancer, but by using the ingress controller to manage all the traffic that comes through it, we consolidate all our routing and service logic in a single place.

One of the most popular ingress controllers is the Nginx Ingress Controller, which uses the Nginx web server under the hood to direct our traffic. Other ingress controllers are also available, such as Istio Ingress and HAProxy Ingress.

Let’s look at a diagram so we can better understand this.

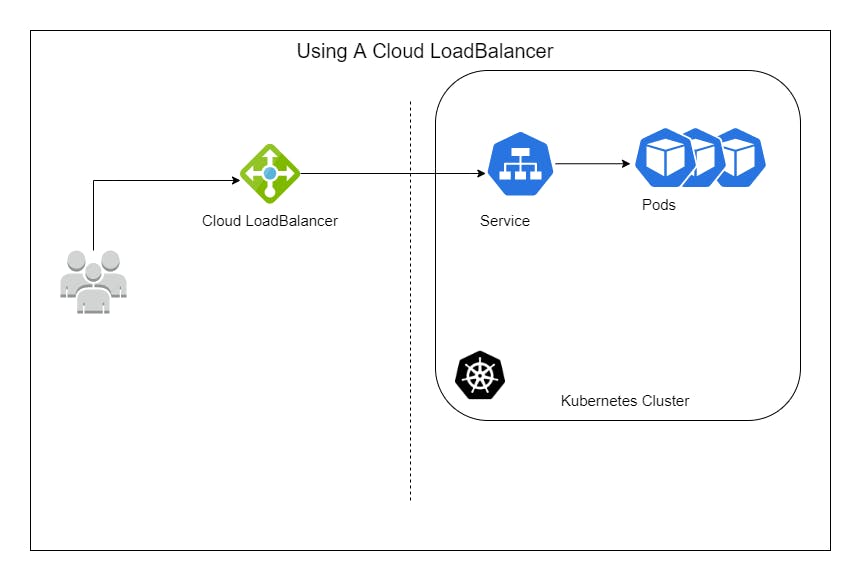

On the right-hand side of the dotted line, we have our Kubernetes cluster.

We’ve got pods running our application and a Service object defining access to them. We then ask our cloud provider (AWS, GCP, Azure, etc.) to provision a load balancer to expose that service to the outside world.

But with this model, every extra service we want to expose to the outside world means provisioning another load balancer. We are somewhat limited in how we can configure these load balancers, and as we add more services, we’ll start paying for more load balancer forwarding rules.
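For reference, the per-service model described above typically looks like the sketch below. The Service name, the `app: web` label, and the port numbers are hypothetical. Each Service of `type: LoadBalancer` asks the cloud provider for its own load balancer.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-service        # hypothetical name
spec:
  type: LoadBalancer       # the cloud provider provisions one load balancer for this Service
  selector:
    app: web               # assumed label on the application pods
  ports:
    - port: 80             # port exposed by the load balancer
      targetPort: 8080     # assumed container port
```

Exposing a second application this way means a second manifest like this one, and with it a second cloud load balancer.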

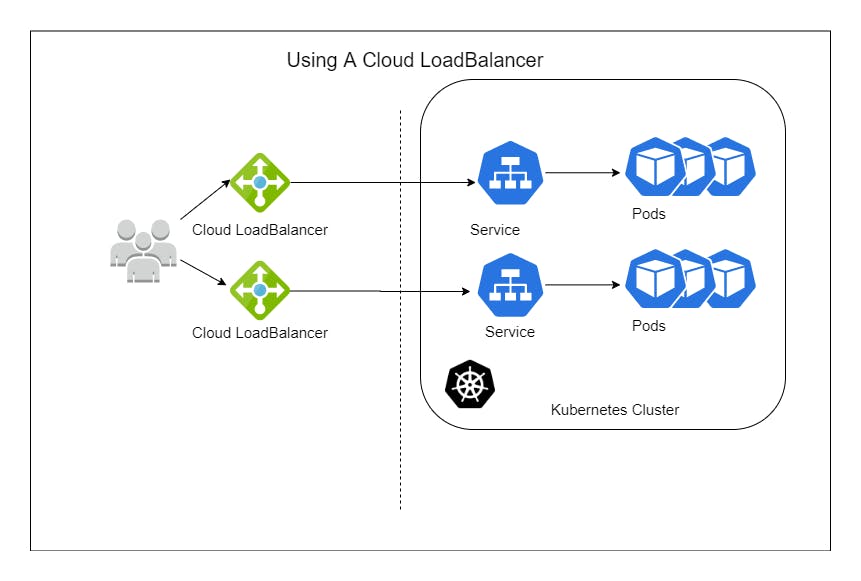

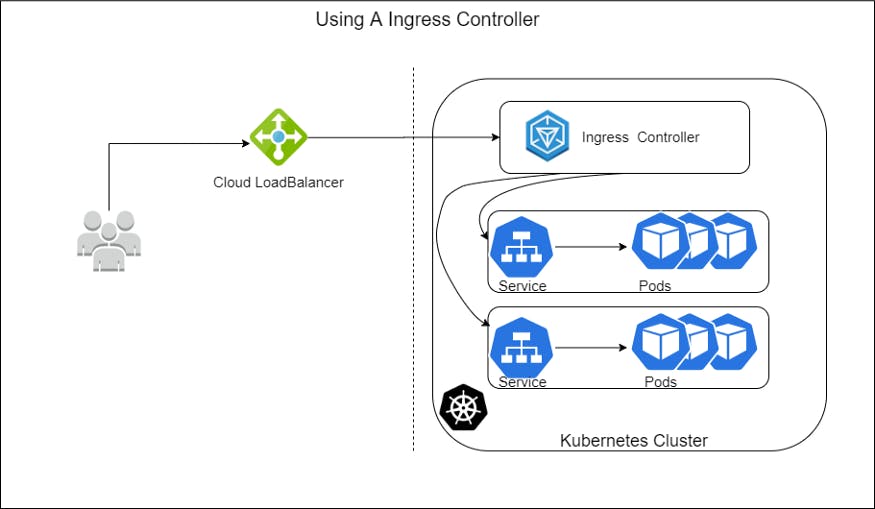

Alternatively, with the ingress controller model, we need only a single cloud load balancer. All traffic is first routed to the ingress controller, which then routes it to the appropriate services in our cluster.

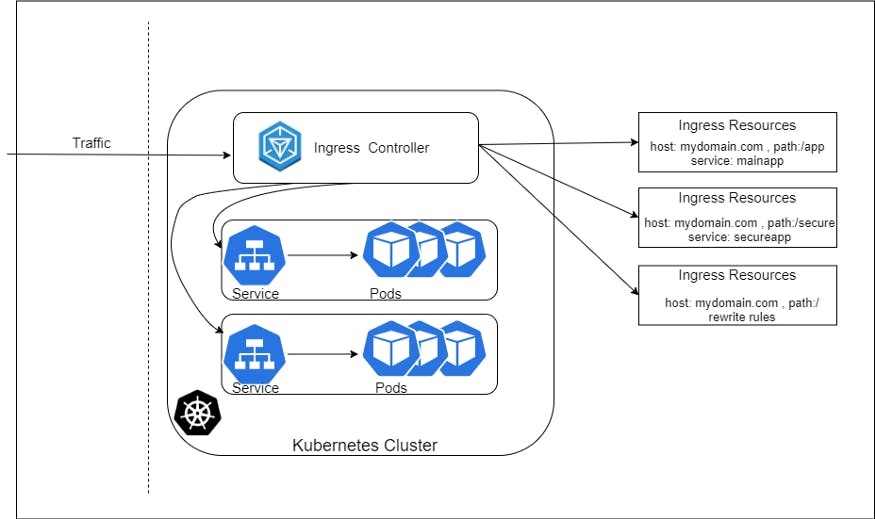

Let’s take a closer look at how the ingress controller works!

When traffic hits the ingress controller, it checks the Ingress resources for its routing logic. From there it can route traffic to different backend services based on the hostname or the path in the URL. We can even annotate an Ingress resource with rewrite rules.
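A rewrite rule might be sketched as follows, assuming the Nginx Ingress Controller, whose annotations are controller-specific and not part of the Ingress API itself. The hostname and the `api-service` backend are hypothetical. The idea is that a request to `/api/users` reaches the backend as `/users`.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rewrite-example              # hypothetical name
  annotations:
    # Controller-specific annotations; other ingress controllers use different keys.
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /$2   # $2 is the second capture group of the path
spec:
  ingressClassName: nginx
  rules:
    - host: shop.example.com
      http:
        paths:
          - path: /api(/|$)(.*)      # regex path; (.*) captures the remainder after /api/
            pathType: ImplementationSpecific
            backend:
              service:
                name: api-service
                port:
                  number: 8080
```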

Nginx Ingress Controller

The Nginx Ingress Controller is a widely used ingress controller. It is available as a stable Helm chart and can be installed in minutes. It can run as a regular Deployment or as a DaemonSet, and if you choose a regular Deployment, it also supports horizontal pod autoscaling. The Kubernetes project itself also supports it, so if it fits your needs, it’s a great choice. And by replacing multiple LoadBalancers with a single ingress controller (or a few), you can save much of the money you would otherwise spend on provisioning them.
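The Deployment and autoscaling options could be expressed as Helm values along these lines. This is a sketch that assumes the community `ingress-nginx` chart; the replica counts and CPU target are arbitrary, and the key names should be checked against the `values.yaml` of the chart version in use.

```yaml
# Hypothetical values file for the ingress-nginx Helm chart
controller:
  kind: Deployment                       # or DaemonSet to run one controller pod per node
  autoscaling:
    enabled: true                        # horizontal pod autoscaling (Deployment mode)
    minReplicas: 2                       # arbitrary example values
    maxReplicas: 5
    targetCPUUtilizationPercentage: 75
```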